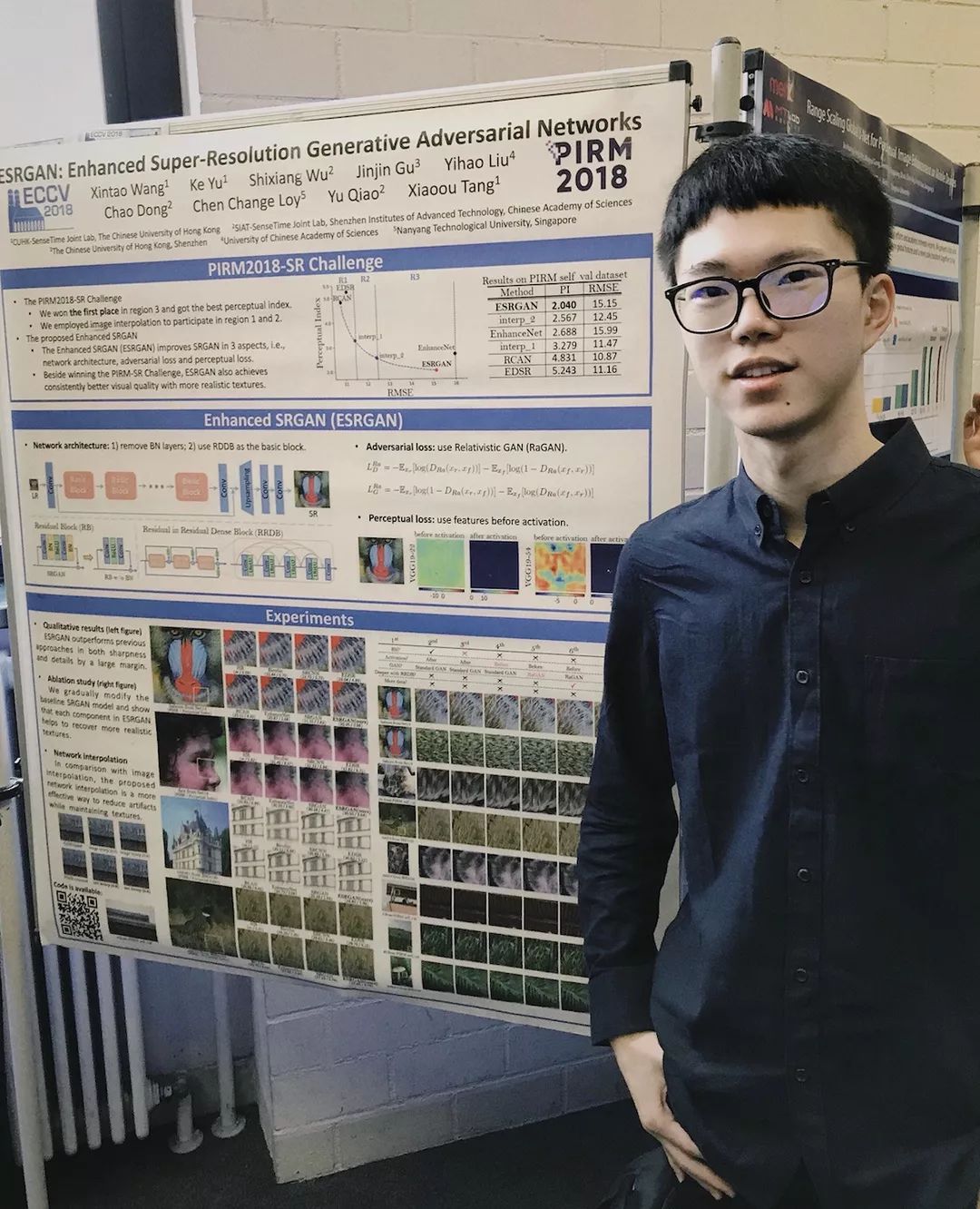

Paper Published at ECCV 2018 by SSE Student Jinjin Gu

Recently, Jinjin Gu, a senior SSE student at The Chinese University of Hong Kong, Shenzhen, together with his collaborators at SenseTime Research and the CUHK Multimedia Laboratory, published a paper at ECCV 2018, one of the top conferences in computer vision. In this paper, they propose the Enhanced Super-Resolution Generative Adversarial Network (ESRGAN). Even more exciting, the ESRGAN model won first place with the best perceptual score in the PIRM2018-SR Challenge.

Conference: European Conference on Computer Vision 2018 (ECCV 2018)

About the Conference:

ECCV, the European Conference on Computer Vision, has a paper acceptance rate of about 25-30%. Each edition, ECCV accepts about 300 papers from around the world, most of which come from top laboratories and research institutes in the US and Europe, and about 10 to 20 of which come from China. ECCV 2018 was held in Munich, Germany. Held biennially (every two years), ECCV is regarded, together with CVPR and ICCV, as one of the top three academic conferences in computer vision.

Title: ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks

Abstract:

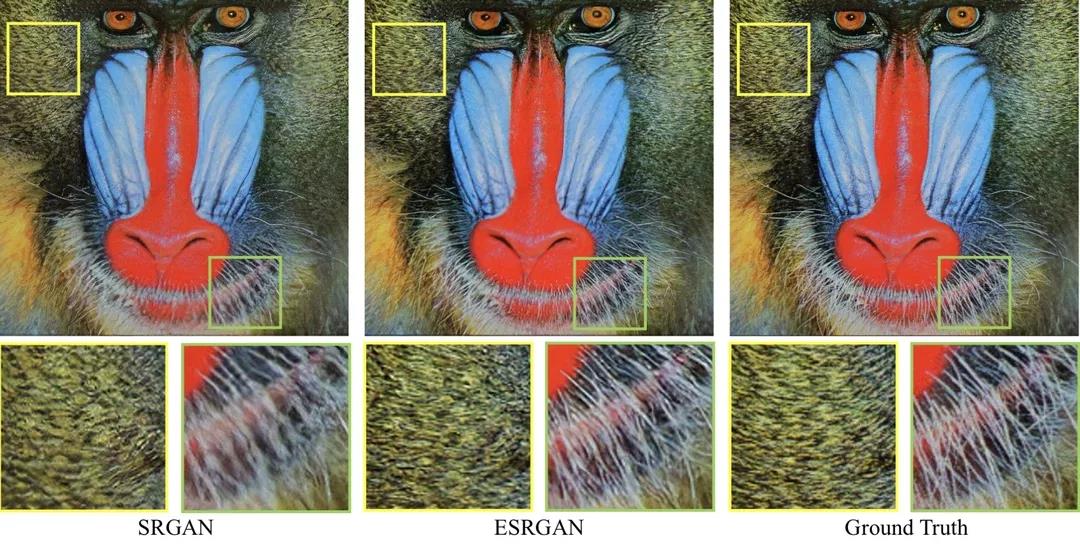

The Super-Resolution Generative Adversarial Network (SRGAN) is a seminal work that is capable of generating realistic textures during single image super-resolution. However, the hallucinated details are often accompanied with unpleasant artifacts. To further enhance the visual quality, we thoroughly study three key components of SRGAN - network architecture, adversarial loss and perceptual loss, and improve each of them to derive an Enhanced SRGAN (ESRGAN). In particular, we introduce the Residual-in-Residual Dense Block (RRDB) without batch normalization as the basic network building unit. Moreover, we borrow the idea from relativistic GAN to let the discriminator predict relative realness instead of the absolute value. Finally, we improve the perceptual loss by using the features before activation, which could provide stronger supervision for brightness consistency and texture recovery. Benefiting from these improvements, the proposed ESRGAN achieves consistently better visual quality with more realistic and natural textures than SRGAN and won the first place with the best perceptual score in the PIRM 2018-SR Challenge.
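The relativistic discriminator mentioned in the abstract can be illustrated with a short sketch. This is a minimal NumPy sketch, assuming the "relativistic average" form of the relativistic GAN idea: instead of asking "is this image real?", the discriminator asks "is this real image more realistic than the average generated image?" (and vice versa). The function name `relativistic_average_losses` and its inputs (raw, pre-sigmoid discriminator scores passed as plain arrays) are hypothetical and for illustration only; this is not the authors' code.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def relativistic_average_losses(c_real, c_fake, eps=1e-12):
    """Hypothetical helper: relativistic average GAN losses.

    c_real, c_fake: raw (pre-sigmoid) discriminator scores C(x) for a batch of
    real high-resolution images and a batch of generated (super-resolved) images.
    Returns (discriminator_loss, generator_loss).
    """
    c_real = np.asarray(c_real, dtype=float)
    c_fake = np.asarray(c_fake, dtype=float)

    # Probability that each real image is more realistic than the average fake one
    d_real = sigmoid(c_real - c_fake.mean())
    # Probability that each fake image is more realistic than the average real one
    d_fake = sigmoid(c_fake - c_real.mean())

    # Discriminator: push d_real toward 1 and d_fake toward 0
    loss_d = -np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps))
    # Generator: the symmetric objective, so both real and generated
    # images contribute gradients to the generator
    loss_g = -np.mean(np.log(1.0 - d_real + eps)) - np.mean(np.log(d_fake + eps))
    return loss_d, loss_g


# Usage: real images score clearly higher than generated ones
loss_d, loss_g = relativistic_average_losses([2.0, 3.0], [-1.0, 0.0])
print(loss_d, loss_g)  # discriminator loss is small, generator loss is large
```

When real and generated images receive identical scores, every relative probability is 0.5, so both losses equal 2·ln 2 ≈ 1.386. A notable design point is that the generator loss also depends on the real images' scores, so the generator receives gradients from both real and generated data rather than from generated data alone.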

Profile

Jinjin Gu

Senior student at the School of Science and Engineering and the Shaw College

Computer Science and Engineering

Graduated from Tanggu No. 1 Middle School, Binhai New Area, Tianjin

Homepage: http://www.jasongt.com/

Jinjin Gu is currently a senior student in the School of Science and Engineering at The Chinese University of Hong Kong, Shenzhen, majoring in Computer Science and Engineering. He is a research assistant in the Lab of Energy Internet and a research intern at SenseTime Research. He is also one of the founders of the CUHK-Shenzhen Computer Association. Before that, he was a research assistant at the Institute of Image Communication and Network Engineering at Shanghai Jiao Tong University. His research interests lie primarily in the theory and application of machine learning, including representation learning, manifold learning, and the application of information geometry to machine learning. He is also interested in applying machine learning to computer vision, including learning-based image and video processing, image and 3D segmentation, and the super-resolution perception and manipulation of industrial sensor data.

Dialogue

Q1: Could you introduce your contribution to this paper?

A1: Our paper, ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks, focuses on perceptual image super-resolution. It systematically analyzes the key components of applying generative adversarial networks to image super-resolution, namely the network architecture, the adversarial loss, and the perceptual loss, and proposes improvements to each of them, which together make up ESRGAN. Our team has worked for a long time on both frontier research and bringing that research into real products, and the paper is essentially a summary of that work. I believe the final method's performance owes a great deal to everyone's long-term engineering practice.

Q2: How have your colleagues positively influenced your research?

A2: The most important lesson I've learned from my colleagues at SenseTime and the CUHK Multimedia Laboratory is to be patient and to build research on a solid foundation. Because CV and AI are both at the forefront of technology, newcomers may follow the crowd and focus too much on fancy ideas during their first two years of study. But joining SenseTime and being exposed to the most cutting-edge research is good discipline: under the demands of commercialization and productization, you'll see that "fancy" doesn't work at all. The basic requirement is that a method actually works, which even many published papers fail to achieve. A key point I've learned at SenseTime is that research is for solving problems, not for producing impressive papers. During my studies, I've received a great deal of help from my colleagues and mentors at SenseTime, the CUHK Multimedia Laboratory, and the Shenzhen Institutes of Advanced Technology.

Q3: Could you tell us about some exciting projects underway at SenseTime?

A3: I work in the Human Cyber Physical Intelligence Integration Lab at the SenseTime Institute. My research group works on the research and productization of cutting-edge AI image processing algorithms, including image denoising, super-resolution reconstruction, image deblurring, and image generation. Many well-known domestic smartphone brands use our AI smart camera algorithms. I'm mainly responsible for academic research and for leading a project: I focus on academic problems that urgently need solutions and then write them up as papers to share with peers. Many other groups at SenseTime are advancing frontier research and algorithm productization across diverse areas of vision. SenseTime covers such a wide range of research that it has groups in every vision-related direction, bringing one cutting-edge technology after another into real products. We are all proud of that.

Q4: What are your academic goals for the future?

A4: My personal research falls into two areas. One is image processing, including more advanced and intelligent perceptual image super-resolution and bringing advanced algorithms into practice; the other is the application of advanced generative models in industry. This paper is a milestone in perceptual image super-resolution, and I will build on it with more ambitious work later. In image processing, I focus on blind spots in practical problems, for example, handling complex, unknown noise in real-world environments. We have solved some really important practical problems and deployed our algorithms on mobile phones. Next year, my main goal is to summarize our existing technical breakthroughs into complete academic work and publish it. My other line of research, the super-resolution perception of industrial sensor data, is conducted in the Energy Internet Laboratory of CUHK. We focus on using edge AI algorithms to empower industrial systems, advancing industrial informatization without the need to replace existing sensors on a large scale. A preprint of our first paper in this area, titled Super-Resolution Perception of Industrial Sensor Data, is available on the arXiv.org preprint server, and we welcome your attention to it and to our future research.

Q5: What is the biggest help you've received during your three years in the School of Science and Engineering?

A5: As an SSE student, I'm grateful to Prof. Zhao Junhua for the tremendous help he gave me when I started my research. In his laboratory, I learned not only professional knowledge but, more importantly, how to conduct research properly. Prof. Zhao's guidance in cultivating a forward-looking methodology and perspective is what enabled me to conduct independent research at SenseTime.

Q6: What advice would you give to freshmen who want to do scientific research?

A6: First, you need initiative. Research requires intense curiosity, creative thinking, and a lot of time spent reading papers and running experiments. During experiments, no one will constantly push you except yourself. It also takes long-term perseverance to achieve results.

Second, don't be driven by utilitarianism, especially in fields like AI or CV. These fields place great importance on papers, and many flashy papers are published every year. Many students are eager to write a paper for university or job applications; however, you should understand that the quality of your work depends heavily on your mindset. If you only care about getting published, the quality of your work will almost certainly suffer, and you may even miss the deadline. But if you truly want to solve a problem and finally succeed after long-term research and refinement, that is what you should be proud of. Many students admire those who have published at ECCV or CVPR, yet true researchers focus only on the problem being solved. Those whose work relies on tricks to get published rather than on dealing with real problems should feel ashamed.

Last but not least, stay patient. I've seen many students who want to go into AI or CV. However, most of them are just following their classmates and feel they have to do the same thing. Don't just follow the crowd. Students, especially younger ones, should assess their options and start working on what they want to pursue as early as possible instead of blindly following others. Artificial intelligence is not limited to CV and NLP; it also covers many other fields worthy of attention. Choose what you want to do based on your own insight.

Text and layout: Shi Tianyu (sophomore at the SSE and the Shaw College)